Discovering, creating, and sharing digital museum resources: a methodology for understanding the needs and behaviors of student users

Darren Milligan, Smithsonian Institution, USA, Melissa Wadman, Smithsonian Institution, USA, Brian Ausland, Navigation North Learning, USA

Abstract

As museums continue to expand their digitization efforts, many now include a focus on how to understand and improve the impact of access to these previously inaccessible resources. How are different audiences using these collections? How are they impacting the work of scholars, educators, students, and enthusiasts? This paper addresses the potential impact of access to these resources on students through an analysis of an ongoing research and evaluation effort at the Smithsonian. Beginning in 2013, and more intensively throughout 2016, the Smithsonian Center for Learning and Digital Access has explored the potential educational impact of access to its nearly two million digitized museum, library, and archival resources. These efforts are designed to meet the online needs of students via the Smithsonian Learning Lab, a new Web-based platform (launched in June of 2016) for the discovery and creation of personalized learning experiences. The case study project conducted research with students to make the Learning Lab as useful to them as it has been designed to be for educators. The methodology included student observations and interviews; a literature review focused on online learning and the use of digital materials; environmental scans designed to understand the features of online learning systems and social media platforms popular with students; and finally, prototyping with this group in the classroom. The project uncovered some specific approaches to guide the adaptation of the Learning Lab to better meet the needs of students, including approaches that could lead to the development of best practices for enabling educational and personal use of museum digital content by students.

Keywords: education, learning, student, youth, digitization, evaluation

Introduction and project history

So far it has been assumed that the students will go to the museum. This is to a great extent desirable, for they should become familiar with the interior of this building as early as possible, but some of the material would be of more practical use if it could be handled in the school classroom. (Farnum, 1919, 195)

There is untapped potential for the abilities of educational technology to connect students with the materials (such as data and primary sources) required to accomplish authentic educational tasks (Lindquist & Long, 2011). However, while there have been studies over the years of how young people experience learning in museums, there is limited research on the teaching and learning that occurs remotely in classrooms with digital museum resources (Bull, Thompson, et al., 2008). Research has shown that in academically and culturally diverse classrooms, students need both hard scaffolds (e.g., assignments, rubric, and grades) and soft scaffolds (e.g., guidelines and procedures that are geared for learners with varying learning profiles) to explore and create meaningful learning experiences using digital resources and online primary sources (Hernández-Ramos & De La Paz, 2009). This is true in part because access to computers and experience with social media do not necessarily make students competent in navigating the Internet to learn complex tasks (Azevado, et al., 2008). As a result, there is a great necessity to better understand what students themselves need to learn with digital resources (as opposed to what their educators need to construct lessons) in terms of access to, structures for, and differentiated expectations to successfully work with museum digital resources.

The Smithsonian has long strived to meet the needs of teachers, first through the development of field trip programming and enrichment materials for classrooms visiting museums. Originally through print and later through the Web, the Smithsonian developed and distributed lesson plans focused on exhibitions, research, and its mission. In 2003 it developed a website portal, SmithsonianEducation.org, to centralize access to these resources (to provide, in essence, a “one stop shop” for teachers interested in incorporating Smithsonian resources into their classrooms). In 2011, the staff at the Smithsonian Center for Learning and Digital Access (SCLDA) began to consider a redesign of this site (which had grown to include a substantial database of more than 2,000 records describing educational content from across the Smithsonian’s museums, research centers, libraries, and archives), which raised questions about how the site’s resources were being used and how to make more Smithsonian digital assets available.

Smithsonian digitization

While the Smithsonian had been digitizing its collections for several years, the approach was reactive rather than strategic—to support an exhibition or publication or respond to a request from the public. The nature of this work changed as digitization efforts began to receive substantial support from academic, cultural, and funding agencies. Punzalan and Butler (2014) at the University of Maryland provide a thorough overview of this evolution, in which digitization and the development of mechanisms to increase access are part of the “day-to-day performance of heritage work.” At the time of writing, the Smithsonian has a robust digitization program encompassing not only collection objects, but also library and archival materials:

Museum objects and specimens:

- 154 million in collection

- 26 million represented by electronic records

- 2.5 million represented by digital surrogates

Library volumes:

- 2 million in collection

- 1.4 million represented by electronic records

- 28,000 represented by digital surrogates

Archives:

- 156,000 total cubic feet

- 80,000 represented by electronic records

- 26,000 represented by digital surrogates

The overall digitization plan’s scope encompasses the development of digital surrogates for more than 13 million objects, 700,000 library volumes, and 80,000 cubic feet of archival materials (more information on Smithsonian digitization is available on the Smithsonian public dashboard at http://dashboard.si.edu/digitization). That is a lot of digital material. The SmithsonianEducation.org website was a portal to a manageable number of vetted lesson plans and other instructional materials, but a new site that would include many more and different resources, such as digital surrogates for objects and specimens, would have to be based on research into how educators could use such items.

Research with teachers

To address this opportunity, the SCLDA team developed an evaluation and research program. This addressed both the nature of educational use of digitized or digitally-available museum, library, and archival resources, as well as the platforms on which they are presented. The results of five independent studies focused specifically on targeted educators’ preferences in the areas of search, digital learning resource structure, and platform content and functionality (Milligan & Wadman, 2015).

Part of the data collection took place during weeklong workshops for elementary, middle, and high school teachers from a wide variety of disciplines. Those teachers came to Washington, D.C. along with Smithsonian museum educators to learn how to incorporate primary sources and object-based learning approaches into their curriculum. Within this context, an hour a day was dedicated to prototyping (first with paper and eventually with a digital prototype) a new mechanism to deliver and enable the use of these digital resources in their own classrooms. While teachers across grade levels expressed near universal enthusiasm over the prospect of access to such a cornucopia of resources, several expressed reservations. One high school teacher succinctly said, “This is a great resource and I can see its value almost immediately, but I will never use it.” His statement, which was a lightbulb moment for the SCLDA project team, was not one of defiance, but rather an acknowledgment that the skills needed to make use of the prototype (digital/online research, analysis of metadata, structuring of resources using evidence, etc.) were the very skills he was attempting to develop in his students. In his mind, they were the ideal end user.

Alignment to national standards

This teacher was not alone. Most national teacher standards across disciplines place an emphasis on both students’ use of digital tools for presentation or demonstration of work, and on the students’ central role as researcher, creator, and curator. The Common Core State Standards for English Language Arts call for students to be able to interpret a wide range of texts and other sources in a variety of contexts, and to utilize that information for a variety of purposes. Specifically, they call for students to:

- Make strategic use of digital media and visual displays of data to express information and enhance understanding of presentations (Speaking and Listening Anchor Standard 5)

- Integrate and evaluate content presented in diverse media and formats, including visually and quantitatively, as well as in words (Reading Anchor Standard 7)

- Conduct short as well as more sustained research projects based on focused questions, demonstrating understanding of the subject under investigation (Writing Anchor Standard 7)

- Gather relevant information from multiple print and digital sources, assess the credibility and accuracy of each source, and integrate the information while avoiding plagiarism (Writing Anchor Standard 8)

The Next Generation Science Standards call for the ability to apply a variety of science and engineering practices, including planning and carrying out investigations. Specifically:

- Practice 3 focuses on “careful observation and description [that] often lead to identification of features that need to be explained or questions that need to be explored” (NRC, 2012b, 59). Such investigations can include study of high-quality photographs, audio, video, and other digital resources from the natural and scientific worlds.

The College, Career, and Civic Life (C3) Framework for Social Studies State Standards is focused on inquiry, including the ability to develop questions and plan investigations; apply tools and concepts; evaluate sources and use evidence; communicate conclusions; and take informed action.

- Dimension 3 focuses on “the skills students need to analyze information and come to conclusions. These skills focus on gathering and evaluating sources, developing claims, and using evidence to support those claims” (National Council for the Social Studies, 2013, 53).

Well-designed educational resources and platforms that contain authentic digital resources provide rich opportunities for students to develop these skills while simultaneously deepening their understanding of subject-area content (Interactive Educational Systems Design, 2017). The prototyping high school teacher clearly saw the museum resources, and the platform we were testing, as a student tool, not one that should be exclusively made available to teachers. This prototype platform eventually became what is now called the Smithsonian Learning Lab.

Case study project introduction

The Smithsonian Learning Lab (SLL) project is the result of a substantial rethinking of how the digital resources from across the Smithsonian’s nineteen museums, nine major research centers, the National Zoo, and more, can be used together for learning (Milligan, 2014). It aspires to make these resources more accessible and more useful to teachers, students, parents, and anyone seeking to learn more. The Learning Lab is a Web-accessible platform that enables the discovery, by teachers and learners of all types, of millions of digital resources (digitized collections, videos, podcasts, blog posts, interactives, etc.) from the Smithsonian’s galleries, museums, libraries, and archives. It contains research-based tools that aid its users in the customization of its contents for personalized learning. Users of the Lab construct their own “collections” of resources, built by aggregating Smithsonian resources, uploading their own, and augmenting these resources through annotations, assessments, and modifications to existing metadata. The project launched in June of 2016 at https://learninglab.si.edu.

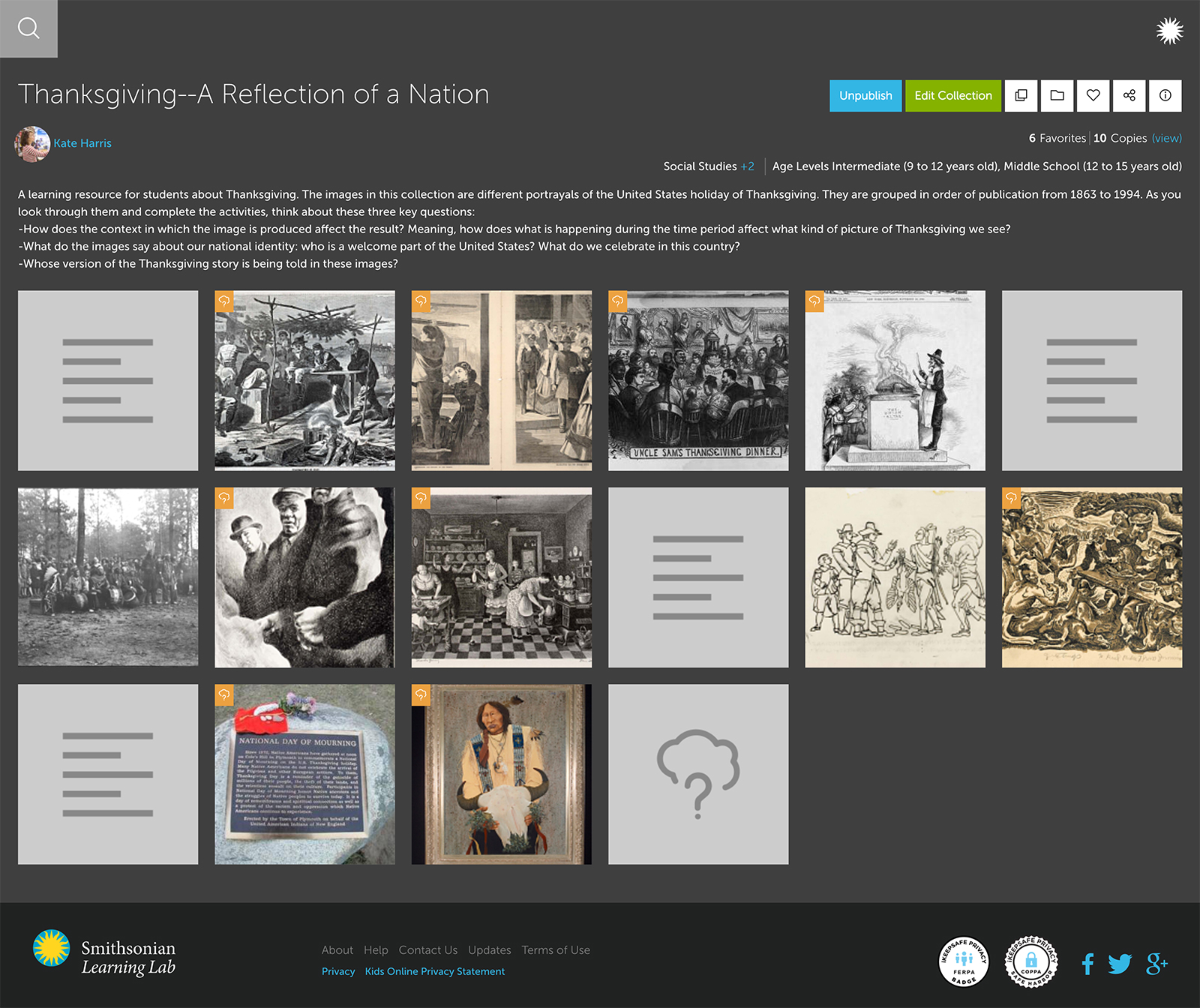

Figure 1: a Smithsonian Learning Lab “collection” created by a teacher incorporating Smithsonian collection objects, uploaded resources from other websites, and user-created annotations and assessments. Available at http://learninglab.si.edu/q/ll-c/b8W1UbCW2mhRrra5.

As described above, the research that led to the features of the SLL was based entirely on our understanding of how teachers might make use of these resources to construct their own lessons. To develop a fuller understanding of how these teachers’ students might do the same, new questions needed to be asked. The project team addressed some key questions around methods for enhancing student motivation and engagement with digital content and tools. With that context and these goals in mind, the specific research questions were:

- What are the ways that students engage with digital content in academic settings?

- What are the motivations for student use of digital content?

- What are the interface requirements/scaffolds needed to enable and enhance student engagement with rich digital resources?

Project methodology

The formative research methodology involved a multi-pronged approach to correlate classroom observations and interviews with students using the SLL, academic studies of digital learning, and an analysis of the competitive environment for both learning and social platforms. The data were analyzed to identify trends among these varied sources. The project team selected the most pertinent trends to test with additional middle and high school students in the classroom. The findings from each of these components were then utilized to suggest functional enhancements to the SLL. The details of each approach are described below.

Classroom observations

Systematic observations were conducted between February and May of 2016 to capture student engagement with the SLL during teacher-created and teacher-mediated learning experiences. The sample included 33 classrooms in 18 schools in Pittsburgh, Pennsylvania, whose teachers were part of another Smithsonian research project. Each observation took place over one class period, during which the entire classroom was observed in order to identify activities where students directly interacted with the SLL on a device, either individually or in groups. The methodology followed best practice for peer observations by using a checklist and rating scale as well as an open-ended written response for observers to complete. Engagement was scored using a five-point scale across five categories (positive body language, consistent focus, verbal participation, student confidence, and excitement), along with a rating of overall engagement (Jones, 2009). Observers completed 27 observations of students interacting with the SLL. The observation data were analyzed through a simple descriptive statistical analysis for the closed-ended scales and by coding the open-ended responses by emerging categories.
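A simple descriptive analysis of closed-ended rating scales of this kind can be sketched in a few lines of Python. The example below is illustrative only: the ratings are invented and are not data from the study, and the function name `summarize_ratings` is a hypothetical helper, not part of the project's tooling. It reports the count, mean, median, and frequency distribution for one category rated on a five-point scale.

```python
from collections import Counter
from statistics import mean, median


def summarize_ratings(ratings):
    """Return simple descriptive statistics for a list of 1-5 ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be whole numbers from 1 to 5")
    counts = Counter(ratings)
    return {
        "n": len(ratings),
        "mean": round(mean(ratings), 2),
        "median": median(ratings),
        # Frequency of every scale point, including points never used
        "distribution": {point: counts.get(point, 0) for point in range(1, 6)},
    }


# Invented ratings for a single category (hypothetical, not study data)
example_ratings = [4, 5, 3, 4, 4, 5, 2]
summary = summarize_ratings(example_ratings)
print(summary)
```

Reporting the full distribution alongside the mean is a common choice for ordinal scales, since the mean alone can hide how ratings cluster.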

Interviews with students

The researchers conducted fifteen interviews with students (11 to 14 year olds) from the classrooms mentioned above to document and evaluate student engagement with the digital content and tools on the SLL. The interview protocol consisted of 16 open-ended questions related to students’ experiences using the SLL, their experiences using other types of websites for learning, and how they would describe the ways they learn outside of school.

The interview subjects were selected using purposeful sampling/expert sampling (students could be enrolled only if they had used the SLL and had parental consent). Classrooms of students were chosen based on: (1) the type of interaction students had independently with the SLL (i.e., they had to have used the SLL individually or in small groups on individual devices, rather than presentation-based or whole-class usage), and (2) a maximum of 12 weeks passing between use of SLL and the interview (to ensure students’ memory of the SLL). The qualitative student interview data were analyzed through an open-coding approach to identify emergent themes and nuances.
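The selection criteria above amount to a small eligibility rule, which can be expressed as a sketch. Everything named here is an assumption made for illustration: the function `is_eligible`, its parameters, and the example dates are hypothetical and do not come from the project's records. Only the criteria themselves (prior SLL use, parental consent, independent or small-group use, and a maximum of 12 weeks between use and interview) are taken from the text.

```python
from datetime import date

MAX_WEEKS_SINCE_USE = 12  # maximum gap stated in the selection criteria


def is_eligible(used_sll, has_parental_consent, independent_use,
                last_use, interview_date):
    """Apply the stated sampling criteria to one student (hypothetical fields)."""
    # Weeks elapsed between the student's last SLL use and the interview
    weeks_since_use = (interview_date - last_use).days / 7
    return bool(
        used_sll
        and has_parental_consent
        and independent_use  # individual or small-group use on a device
        and 0 <= weeks_since_use <= MAX_WEEKS_SINCE_USE
    )


# Hypothetical example: last use ten weeks before the interview
print(is_eligible(True, True, True, date(2016, 3, 1), date(2016, 5, 10)))
```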

Literature review

A general scan was conducted focusing on relevant research, reports, and articles targeting online learning and use of digital content for both formal and informal learning. Given that the project had identified classroom teachers as an initial primary audience through which to gain access to young learners, priority was given to studies, observations, use-cases, and derivative findings that posed the greatest potential applicability to students operating in more formal, structured environments, such as classrooms. The age range targeted for the review was 13 to 17 year olds; students in this group can consent to having personal accounts on both online learning systems and social media platforms.

While soliciting research, reports, and case studies, it was clear that both researchers and practitioners see technology-supported learning as a constantly evolving field in need of more detailed examination (Roblyer, 2005). In exploring the role of technology in learning, challenges exist in organizing fairly disparate research into coherent, less comprehensive categories that persist across a growing array of implementations, populations, constantly changing technology products, and systems. Therefore, we chose a set of broad categories in which to frame the current state of K–12 online supported learning based on the review of relevant literature. The literature review distilled this selected research into the categories of content, pedagogy, and platform, by borrowing terminology and definitions from the International Association for K–12 Online Learning’s student-centered TPAC framework (iNACOL, 2016):

Content

Includes the types of learning resources and existing content made available to students through the system, in addition to the content, resources, and assessments that can be generated by educators with the system authoring and uploading tools.

Pedagogy

Pertains to the methods in which the system is set to organize curriculum, sequence instruction, and scaffold existing descriptive content and user-generated content in relation to resources, assessment, and learner access.

Platform

Refers to the architecture, tools, user experience, and interface design used to navigate, search, store, annotate, author, collaborate, communicate, and share.

Environmental scan

Two distinct environmental scans were performed to address both digital learning systems (defined as Web-based environments designed to help users access direct learning content/support resources as individual learners or part of a learning community) as well as social media systems that are most popular and most useful to target youth (both for personal and academic purposes).

Digital learning systems

In the area of digital learning systems/platforms, all systems identified were assessed for various features and tools across four distinct categories. Systems analyzed were initially selected from widely used platforms supporting users in the targeted age ranges in both formal and informal learning environments (Glowa & Goodell, 2016; iNACOL, 2015; SETDA, 2015). Project team members selected ten comparable platforms that focused on use of open education resources and structured and semi-structured learning activities, and then distributed tools allowing the teacher and learner to modify existing materials or engage in self-assembly of learning activities. The chosen platforms were Blendspace, BrainPop, DIY, DocsTeach, D2L, Gooru, Khan Academy, OER Commons, OpenED, and TED-ED (as well as the SLL).

The features examined were chosen based on an analysis of dominant features found across these systems. The review assessed each system for the presence of specific features or tools, but did not attempt to assess relative effectiveness. (The data collection instruments for the digital learning systems scan can be found here: http://s.si.edu/sllyouthenviro1 and http://s.si.edu/sllyouthenviro2.)

Social media systems

In the area of social media systems/platforms, those chosen were initially cited as most used by teens across the United States according to a number of comprehensive publications (Elgersma, 2016; Ito, 2008; Lenhard, 2015). The sites analyzed were Facebook, Google+, Instagram, Kik, Pinterest, Snapchat, Tumblr, Twitter, Vine, and WhatsApp (as well as the SLL).

Similarly to the digital learning systems, the features examined were generated based on an analysis of dominant features in the selected systems. The review determined if a system did or did not have the feature or tool, and did not attempt to determine the relative effectiveness of any one tool.

(The data collection instruments for the social media systems scan can be found here: http://s.si.edu/sllyouthenviro3 and http://s.si.edu/sllyouthenviro4.)

Classroom prototype testing

After triangulating the data from the classroom observations and interviews, the literature review, and the environmental scans, a research protocol was developed for classroom prototyping. This testing occurred in October of 2016 with 13 to 17 year old students in eight different classrooms at three school sites (middle and high school) in Chico, California. Elements identified for testing through the formative stages of the investigation were largely dependent on the students’ interaction with the platform and content. Conversely, those items that were determined as already being developed, or almost exclusively related to inputs of the teacher/designer authoring a SLL collection, were avoided as testing items. Generally, the items selected were those that could be adequately tested and observed in a classroom setting (table 1).

| Potential Element / Feature / Tool to be Tested | Lit. Review | Env. Scan | Student Obsv. | Largely Dependent on Teacher / Designer | Student Controlled / Focused Element | Can Be Readily Prototyped | To Be Tested |
|---|---|---|---|---|---|---|---|
| 1. Learner ability to record information as notes/Share notes | X | X | X | X | | | + |
| 2. Learner ability to monitor progress/expose progress to others | X | X | X | X | | | + |
| 3. Students communicate and share work product | X | X | X | X | | | + |
| 4. User provided modeling on how to best engage community | X | X | | | | | – |
| 5. Guiding Questions/Inquiry Structure | X | X | X | X | | | – |
| 6. Citation Tool | X | X | X | | | | – |
| 7. Students can work collaboratively on projects or assignments | X | X | X | X | X | | + |
| 8. Prefer access to print-based worksheets or digital version integrated in-system | X | X | X | X | | | + |
| 9. Share resources to others outside of system | X | X | | | | | – |
| 10. Core experience can be embedded in other environment/platform | X | | | | | | – |

Table 1: chart used by the research team to analyze the potential elements, features, or tools to be tested during the classroom prototyping in Chico, California

Case study findings

Student observations and interviews findings

“I liked that it was hands-on and how you could, once you picked your artifact, you could kind of add a hotspot, add information to it and then reflect on the artifact and how it worked, what the artifact’s impact was.” -8th grade student

While this portion of the study revealed that students are already highly engaged with SLL, it also exposed areas for further investigation and suggestions for improvement:

- While students valued the amount and the quality of information accessible through the SLL, they also mentioned the need for more directions and instruction to make use easier and learning possibly deeper.

- The correlation between student-created SLL collections and engagement requires further investigation. With this group of students, a more teacher-driven model was used. The data suggest that if SCLDA wants to encourage more engaging student use of the SLL, there is a need to build in support and examples directly targeting this age group (Corno, 1997).

- Students associated the SLL (as an educational website used for school work within their classes) with activities controlled mainly by their teachers. Further investigation of the characteristics and features of museum resource-based digital experiences inside and outside the classroom, and of their correlation to achieving meaningful learning experiences, will be required (Chung & Tan, 2004; Ito, 2008).

Literature review findings

Findings in this section focus on expanding ways for learners to document their own thinking, monitor their progress while achieving tasks and assignments, and engage in communication as a way to receive feedback and share their work. In reviewing these general trends, the following points represent a summary of key findings and strategies that have substantial research support:

- Learning is more consistent and more readily supports achievement when there is a high level of correlation or alignment between content, objects/resources, visual supports or media, and tasks to aid in persistence and minimize cognitive load (Mayer & Anderson, 1992; Moreno & Mayer, 1999).

- Developing and sustaining an online community focused on inquiry and learning is crucial in helping students access both their instructors and peers (Ito, 2008). Sharing their thinking, their findings, and their learning processes, and having access to those of their peers, helps validate work approach, keeps students engaged, and provides an opportunity to blend social, cognitive, and teaching dynamics (Higgins, 2012).

- Students’ engagement and performance levels increase when quality content and activities are developed by a learner’s own teacher (Squire, 2003).

- Mutual problem-solving or co-development of learning products helps young students make more meaningful connections to their learning and to one another through establishing relationships focused on learning outcomes (Marks, Sibley, & Arbaugh, 2005).

- Presenting students with open-ended, deep, interesting questions and keeping those questions central and accessible to students throughout their inquiry process (Kapa, 2007) helps guide targeted inquiry and progress through complex tasks online (Welch, 2008).

- Having timely feedback on performance (Winters, Greene, & Costich, 2008) from an instructor or even just in the form of external validation of task completion serves as a key motivator and aids student persistence (Wolters & Pintrich, 1998).

- Students bring specific expectations to digitally-supported learning environments, including a desire to personally define how accessing and organizing resources and information works for them, flexibility in engaging the expertise of their instructor and peers, and the freedom to create unique demonstrations of knowledge (Chung & Tan, 2004).

- In the area of personal inquiry and progress monitoring (Hattie & Yates, 2013), visual indicators that document a learner’s progress towards completing online tasks and assist in monitoring that progress can help young students keep on track and motivated and allow them to share their efforts.

Environmental scan findings

Digital learning systems

With a growing reliance on digital resources as a primary content source, many systems are enhancing the tools and features to better capture, store, describe, and deploy more robust resource repositories within their systems. Learner management tools will inherently always be a focused feature set in almost all digital learning environments.

The project team developed a number of visualizations to analyze a comparative set of data where like features/tools did or did not exist in each system. Radar charts were used as the method of presenting data across different learning applications. These provided a means to compare four categories (Resource Management Tools and Features, Learner Actions Tools and Features, Community Collaboration Tools and Features, and Overall Design) as graphed elements simultaneously.

Figure 2: a comparison of the ten identified digital learning systems with their features mapped in the areas of Resource Management Tools and Features, Learner Actions Tools and Features, Community Collaboration Tools and Features, and Overall Design, as compared to the features made available by the Smithsonian Learning Lab.

Numbers of assessed features were tallied and reported in terms of overall % (figure 2):

- Resource Management Tools and Features (systems on average addressed 61.0% of the 40 specific facets assessed)

- Learner Actions Tools and Features (systems on average addressed 60.9% of the 38 specific facets assessed)

- Community Collaboration Tools and Features (systems on average addressed 43.5% of the 20 specific facets assessed)

- Overall Design (UI/UX) Features (systems on average addressed 42.7% of the 22 specific facets assessed)
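The tally behind figures like these can be sketched as follows. This is a minimal illustration under a stated assumption: that each reported average is the mean of per-system percentages, where a system's percentage is the number of facets it addresses divided by the number of facets assessed in that category. The paper does not spell out the calculation, the function names are hypothetical, and the counts are invented rather than taken from the scan.

```python
def coverage_percent(features_present, facets_assessed):
    """Percentage of assessed facets that a single system addresses."""
    if facets_assessed <= 0:
        raise ValueError("facets_assessed must be positive")
    if not 0 <= features_present <= facets_assessed:
        raise ValueError("features_present must be between 0 and facets_assessed")
    return 100.0 * features_present / facets_assessed


def average_coverage(present_counts, facets_assessed):
    """Mean coverage across systems, rounded to one decimal place."""
    if not present_counts:
        raise ValueError("at least one system is required")
    percents = [coverage_percent(c, facets_assessed) for c in present_counts]
    return round(sum(percents) / len(percents), 1)


# Invented counts for three hypothetical systems in a 20-facet category
hypothetical_counts = [10, 8, 6]
print(average_coverage(hypothetical_counts, 20))
```

Because the scan recorded only whether a feature was present, a percentage of this kind describes breadth of features and says nothing about how effective any one feature is.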

The category receiving the most tools and development effort across all digital learning platforms reviewed was Resource Management Tools and Features, with the average system addressing 61% of the assessed features. Sub-elements within this category included:

- Resource Discovery

- Resource Selection

- Resource Sharing

- Resource Storing/Saving

- Resource Systems Integration/Interoperability

Close behind, Learner Actions Tools and Features were also significant, with the average system addressing 60.9% of the assessed features.

The categories that were afforded the least resources/development efforts across all systems were Community Collaboration Tools and Features (peer-to-instructor and peer-to-peer collaboration and communication) and Overall Design (UI/UX) Features (customization of the system to meet specific user needs in relation to user interface features).

Social media systems

As the primary function of social media platforms, community sharing, communication, and collaboration are consistently a focal point of most tool and feature development; however, resource management is becoming a growing area of development considerations as more communities engage around common sharing, organizing, and annotation of resources such as images, videos, and other media.

Figure 3: a comparison of the ten identified social media systems with their features mapped in the areas of Community Collaboration, Resource Management, and System Design, as compared to the tools made available by the Smithsonian Learning Lab

Numbers of assessed features were tallied and reported in terms of overall % (figure 3):

- Community Collaboration Tools and Features (systems on average addressed 49.6% of the 23 specific facets assessed)

- Resource Management Tools and Features (systems on average addressed 40.6% of the 32 specific facets assessed)

- Overall Design (UI/UX) Features (systems on average addressed 34.1% of the 17 specific facets assessed)

The category receiving the most development effort across all social media platforms reviewed was Community Collaboration, with the average system addressing 49.6% of the assessed features. Sub-elements within this category included:

- Group Tools

- Discussion Tools

- Communication Tools

- User Activity Management Tools

Categories that were afforded the least resources/development efforts across all systems were related to Resource Management (resource sharing, storing, categorizing) and Overall Design (UI/UX) features (customization of the system to meet specific user needs in relation to user interface features).

Prototype testing findings

Prototyping with students examined trends across five features (table 1):

- Learner ability to record information as notes and share notes

- Learner ability to monitor progress and expose progress to others

- How students communicate and share work products

- Whether students can work collaboratively on projects or assignments

- Whether students prefer print-based worksheets or a digital version integrated in-system

After observation, we found that:

- the majority of students made use of digital note-taking tools when available

- the majority of students preferred in-system questions and guidance to a paper-based worksheet when both were available

- the majority of students were able to set up personal accounts to record information and submit it to the teacher when made available

- the majority of students DID NOT opt to work collaboratively when allowed

- the majority of students DID NOT take advantage of reviewing peers' responses or contributions when provided access

Students’ use of progress monitoring for self-tracking and/or as visual indicators to others was not effectively tested, nor was the duration of the tasks sufficient to model research use-cases in which students were engaged in learning that spanned multiple class periods or multiple weeks of sustained coursework.

Discussion and implications for Smithsonian Learning Lab development

From such disparate sources of information, a cautious approach is warranted in drawing conclusive statements in relation to all online learning systems or the use of digital museum resources. In the course of this project, the variability of the environments in which learning occurs has been evident. The intention was not to draw specific universal conclusions, but rather to identify areas for future development in the SLL system. When taken together, the components of the research highlighted some key findings:

- Young learners expect navigation, search, and discovery to require minimal effort and a system that can anticipate search terms and provide quality results or related suggestions.

- Young learners remain more engaged and persistent in completing online learning content when their progress is acknowledged and visual indicators track completion of certain benchmarks within the learning content.

- Young learners benefit from receiving regular feedback on tasks and contributions they complete within their online learning environments from their educators, peers, or other members of the learning community.

- Young learners benefit when provided the means to annotate or record notes that document their thinking, findings, and questions when engaging learning content.

- Young learners, given opportunities to engage their peers in collaborative discovery and shared findings, are more engaged in extended reflection on their own thinking and conclusions.

- Young learners can more readily engage in self-regulated learning and show increased performance on tasks when content, tools, and inquiry are closely aligned to minimize transactional distance.

Table 2 details proposed enhancements or new features for the SLL based on each of the key findings. It includes the specific finding, the instrument(s) that supported the finding, and the proposed development to improve the SLL experience for students. While any one instrument might have generated a number of other findings, those reported below represent elements that could feasibly be addressed through additional features, tool development, or design enhancements to the technical platform.

| Summary of findings, features, or strategies to improve youth engagement with SLL | Lit. Review | Env. Scans | Student Testing/Obsv. |
| --- | --- | --- | --- |
| Finding 1: Young learners expect navigation, search, and discovery to require minimal effort, and a system that can anticipate search terms and provide quality results or related suggestions. | X | X | |
| Proposed development: | | | |
| Finding 2: Young learners remain more engaged and persistent in completing online learning content when their progress is acknowledged and visual indicators track completion of certain benchmarks within the learning content. | X | X | |
| Proposed development: | | | |
| Finding 3: Young learners benefit from receiving regular feedback on tasks and contributions they complete within their online learning environments from their educators, peers, or other members of the learning community. | X | X | X |
| Proposed development: | | | |
| Finding 4: Young learners benefit when provided the means to annotate or record notes that document their thinking, findings, and questions when engaging learning content. | X | X | X |
| Proposed development: | | | |
| Finding 5: Young learners, given opportunities to engage their peers in collaborative discovery and shared findings, are more engaged in extended reflection on their own thinking and conclusions. | X | X | |
| Proposed development: | | | |
| Finding 6: Young learners can more readily engage in self-regulated learning and show increased performance on tasks when content, tools, and inquiry are closely aligned to minimize transactional distance. | X | X | |
| Proposed development: | | | |

Table 2: an overview of the case study project’s findings, the source of the finding’s evidence, and proposed SLL developments to address them.

While these conclusions and recommended enhancements apply directly to the existing features of the SLL, many of them include generalizable best practices that other systems, programs, or tools could consider testing and implementing.

Conclusions

Upon revisiting the original research goals, it is clear that there is more work to be done to adequately understand the nuanced environment of the classroom. To better understand how students engage with digital content in academic settings (research goal 1), the project team continues to observe students making use of the SLL and its resources in middle and high school classrooms. That analysis will be combined with the work reported here and concluded in the fall of 2017.

The project team had hoped to better understand the motivations for student use of digital content (research goal 2) primarily through the analysis of social media platforms/systems. Clearly, looking outside the field often offers great insight into how our “competitors” are addressing these needs. Those platforms, whose business models depend on adequately solving these challenges, place great value on tools that enable communication and socialization. How these features could be integrated in museum systems, or how museum systems could be built to better align with them, is worthy of additional investigation.

The final goal for the project centered on technical scaffolds that could be built into a system to enhance engagement with digital resources. The findings above enable the next steps for the Smithsonian project; however, more research and implementations will be needed (by additional museum digital resource platforms) to add to this body of knowledge.

While the individual components of this investigation all reveal intriguing insights into how youth engage in online learning and utilize digital resources, it is clear that deeply exploring these behaviors is complex and not easily concluded in a limited study such as the one described in this paper. Understanding the complex realities of the classroom, much less those educational environments moving towards blended or flipped structures (those in which part or all of the learning activities are performed independently or through digital platforms), is a moving target, but one worthy of the effort.

Acknowledgements

This research was supported by a grant from the Smithsonian Youth Access Grant program. The authors wish to thank Pino Monaco, Ashley Naranjo, Kate Harris, and Linda Muller from the Smithsonian Center for Learning and Digital Access for their vital assistance in the data collection and analysis described in this project. This paper summarizes research reports conducted or commissioned by the Smithsonian Center for Learning and Digital Access in 2016 (Navigation North Learning Solutions and the Smithsonian Center for Learning and Digital Access, 2017). The following individuals, in addition to the authors, made significant contributions to the research design, data collection, analysis, and writing of the original reports and should be acknowledged: Dr. John Ittelson (Northwestern University), Dr. Bobbi Kamil (Syracuse University), Nick Brown (California State University, Chico), and Jasper Travers (University of California, San Diego). Also thanks to Stephanie Norby, Michelle Smith, and Ashley Naranjo (SCLDA) for their support and assistance in producing this paper. The authors also express their gratitude to the late Claudine Brown, former Smithsonian Assistant Secretary for Education and Access, as well as Karen Garrett of the Youth Access Grant program.

References

Azevedo, R., D.C. Moos, J.A. Greene, F.I. Winters, & J.G. Cromley. (2008). “Why is externally-facilitated regulated learning more effective than self-regulated learning with hypermedia?” Educational Technology Research and Development 56(1), 45-72.

Bull, G., A. Thompson, M. Searson, J. Garofalo, J. Park, C. Young, & J. Lee. (2008). “Connecting Informal and Formal learning: Experiences in the age of participatory media.” Contemporary Issues in Technology and Teacher Education 8(2).

Chung, J, & F. Tan. (2004). “Antecedents of perceived playfulness: an exploratory study on user acceptance of general information-searching websites.” Information & Management 41(7), 869-81.

Corno, L., & J. Randi. (1997). “Teachers as innovators.” International handbook of teachers and teaching. 1163-1221.

Elgersma, C. (2016). “16 Apps and Websites Kids Are Heading to After Facebook.” Parenting, Media, and Everything In Between. March 1, 2016. Accessed January 28, 2017. Available https://www.commonsensemedia.org/blog/16-apps-and-websites-kids-are-heading-to-after-facebook.

Farnum, R.B. (1919). “The Place of the Art Museum in Secondary Education.” The Metropolitan Museum of Art Bulletin 14(9), 194–96.

Glowa, L., & J. Goodell. (2016). Student-Centered Learning: Functional Requirements for Integrated Systems to Optimize Learning. Vienna, VA.: International Association for K-12 Online Learning (iNACOL).

Hattie, J., & G. Yates. (2013). Visible learning and the science of how we learn. New York, NY: Routledge.

Hernández-Ramos, P., & S. De La Paz. (2009). “Learning history in middle school by designing multimedia in a project-based learning experience.” Journal of Research on Technology in Education 42(2), 151.

Higgins, S., Z. Xiao, & M. Katsipataki. (2012). “The Impact of Digital Technology on Learning: A Summary for the Education Endowment Foundation.” Durham University. Accessed January 28, 2017. Available https://v1.educationendowmentfoundation.org.uk/uploads/pdf/The_Impact_of_Digital_Technologies_on_Learning_FULL_REPORT_(2012).pdf.

iNACOL. (2013). “New Learning Models Vision – iNACOL.” Accessed June 7, 2016. Available http://www.inacol.org/wp-content/uploads/2013/11/iNACOL-New-Learning-Models-Vision-October-2013.pdf.

Interactive Educational Systems Design, Inc. (2017). “Integrating Authentic Digital Resources in Support of Deep, Meaningful Learning.” Smithsonian Center for Learning and Digital Access. Washington, DC. Accessed January 28, 2017. Available https://learninglab.si.edu/news/new-white-paper-suggests-ways-using-the-lab-can-achieve-educational-goals.

ISTE Connect. (2015). “19 Places to Find the Best OER.” ISTE Connects. September 17, 2015. Accessed January 29, 2017. Available https://www.iste.org/explore/articleDetail?articleid=538.

Ito, M. (2008). “Living and Learning with New Media – Digital Youth Research.” Accessed January 28, 2017. Available http://digitalyouth.ischool.berkeley.edu/files/report/digitalyouth-WhitePaper.pdf.

Ito, M., H. Horst, M. Bittanti, D. Boyd, B. Herr-Stephenson, P. Lange, C. Pascoe, & L. Robinson. (2008). “Living and Learning with New Media: Summary of Findings from the Digital Youth Project.” In The John D. and Catherine T. MacArthur Foundation Reports on Digital Media and Learning. November 2008. Accessed January 28, 2017. Available http://digitalyouth.ischool.berkeley.edu/files/report/digitalyouth-WhitePaper.pdf.

Jones, R. (2009). Student Engagement Teacher Handbook. Rexford, New York: International Center for Leadership in Education. 31–32.

Kapa, E. (2007). “Transfer from structured to open-ended problem solving in a computerized metacognitive environment.” Learning and Instruction 17(6), 688-707.

Kirschner, P. A. (2015). “Do we need teachers as designers of technology enhanced learning?” Instructional Science 43(2), 309-22.

Lenhart, A. (2015). “Teens, Social Media and Technology Overview 2015.” Pew Research Center. April 2015. Accessed January 28, 2017. Available http://www.pewinternet.org/files/2015/04/PI_TeensandTech_Update2015_0409151.pdf.

Lindquist, T., & H. Long. (2011). “How can educational technology facilitate student engagement with online primary sources?” Library Hi Tech 29(2), 224-241.

Marks, R., S. Sibley, & J. Arbaugh. (2005). “A structural equation model of predictors for effective online learning.” Journal of Management Education 29(4), 531-63.

Mayer, R., & R. Anderson. (1992). “The instructive animation: Helping students build connections between words and pictures in multimedia learning.” Journal of Educational Psychology 84(4), 444.

Milligan, D. (2014). “What Is the Smithsonian Learning Lab?” Smithsonian Learning Lab News. Accessed September 9, 2015. Available http://learninglab.si.edu/news/2014/10/building-the-smithsonian-learning-lab/.

Milligan, D., & M. Wadman. (2015). “From physical to digital: Recent research into the discovery, analysis, and use of museums resources by classroom educators and students.” MW2015: Museums and the Web 2015. Chicago, Illinois, MW2015: Museums and the Web 2015.

Moreno, R., & R. Mayer. (1999). “Cognitive principles of multimedia learning: The role of modality and contiguity.” Journal of Educational Psychology 91(2), 358.

Navigation North Learning Solutions and the Smithsonian Center for Learning and Digital Access. (2017). Characteristics of Digital Learning Content, Pedagogies, and Platforms That Support Young Learners: An Analysis of Existing Literature and Research (unpublished research report). Smithsonian Center for Learning and Digital Access.

Navigation North Learning Solutions and the Smithsonian Center for Learning and Digital Access. (2017). Environmental Scan of Digital Learning Platforms Popular with Young Learners: A Comparison of Systems and Features (unpublished research report). Smithsonian Center for Learning and Digital Access.

Navigation North Learning Solutions and the Smithsonian Center for Learning and Digital Access. (2017). Environmental Scan of Social Media Platforms Popular with Young Learners: A Comparison of Systems and Features (unpublished research report). Smithsonian Center for Learning and Digital Access.

Navigation North Learning Solutions and the Smithsonian Center for Learning and Digital Access. (2017). Summary of Data from Chico USD Student Testing and Observations (unpublished research report). Smithsonian Center for Learning and Digital Access.

Navigation North Learning Solutions and the Smithsonian Center for Learning and Digital Access. (2017). Technical Specifications for Future Development of the Smithsonian Learning Lab (unpublished research report). Smithsonian Center for Learning and Digital Access.

Punzalan, R., & B. Butler. (2014). “Valuing Our Scans: Assessing the Value and Impact of Digitizing Ethnographic Collections for Access.” MW2014: Museums and the Web 2014. Published January 16, 2014. Accessed January 27, 2017. Available http://mw2014.museumsandtheweb.com/paper/valuing-our-scans-assessing-the-value-and-impact-of-digitizing-ethnographic-collections-for-access/.

Roblyer, M. (2005). “Educational technology research that makes a difference: Series introduction.” Contemporary Issues in Technology and Teacher Education 5(2).

SETDA. (2015). “Ensuring the Quality of Digital Content for Learning: Recommendations for K12 Education.” Last updated March 2015. Accessed January 29, 2017. Available http://www.setda.org/wp-content/uploads/2015/03/Digital_brief_3.10.15c.pdf.

Squire, K., J. MaKinster, M. Barnett, A.L. Luehmann, & S.L. Barab. (2003). “Designed curriculum and local culture: Acknowledging the primacy of classroom culture.” Science Education 87(4).

Wadman, M., & P. Monaco. (2016). Summary of Data from Pittsburgh Students to Inform Prototyping for Smithsonian Youth Access Grant (unpublished research report). Smithsonian Center for Learning and Digital Access.

Welch, W., L.E. Klopfer, G.S. Aikenhead, & J.T. Robinson. (1981). “The role of inquiry in science education: Analysis and recommendations.” Science Education 65(1), 33-50.

Winters, F., J. Greene, & C. Costich. (2008). “Self-regulation of learning within computer-based learning environments: A critical analysis.” Educational Psychology Review 20(4), 429-44.

Wolters, C., & P. Pintrich. (1998). “Contextual differences in student motivation and self-regulated learning in mathematics, English, and social studies classrooms.” Instructional Science 26(1-2), 27-47.

Cite as:

Milligan, Darren, Melissa Wadman and Brian Ausland. "Discovering, creating, and sharing digital museum resources: a methodology for understanding the needs and behaviors of student users." MW17: MW 2017. Published February 1, 2017. Consulted .

https://mw17.mwconf.org/paper/discovering-creating-and-sharing-digital-museum-resources-a-methodology-for-understanding-the-needs-and-behaviors-of-student-users/